Alleviating label switching with optimal transport

Authors

- Farzaneh Mirzazadeh

- Justin Solomon

- Mikhail Yurochkin

- Pierre Monteiller

- Sebastian Claici

- Edward Chien

Published on

12/10/2019

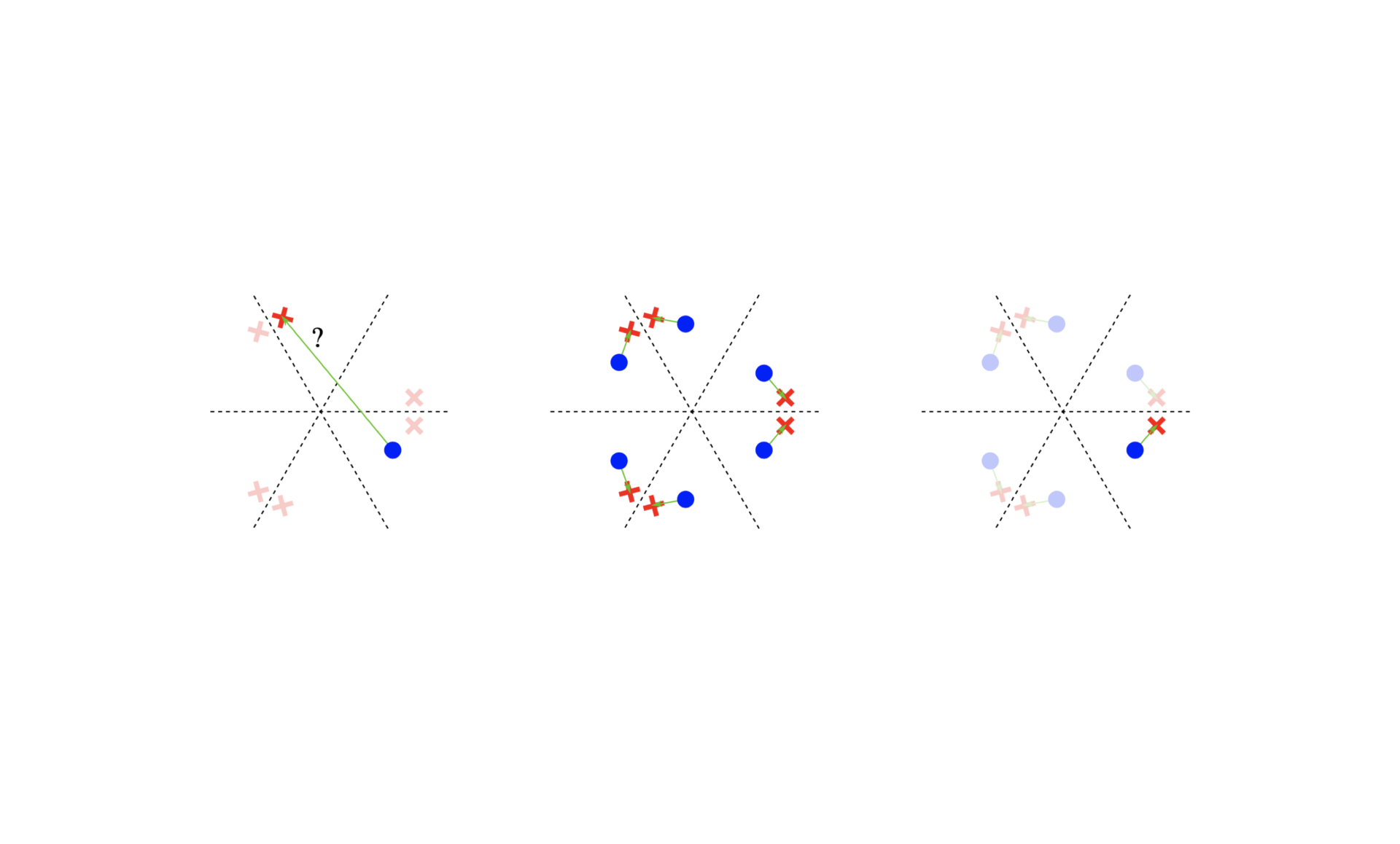

Label switching is a phenomenon arising in mixture model posterior inference that prevents one from meaningfully assessing posterior statistics using standard Monte Carlo procedures. This issue arises due to invariance of the posterior under actions of a group; for example, permuting the ordering of mixture components has no effect on posterior likelihood. We propose a resolution to label switching that leverages machinery from optimal transport. Our algorithm efficiently computes posterior statistics in the quotient space of the symmetry group. We give conditions under which there is a meaningful solution to label switching and demonstrate advantages over alternative approaches on simulated and real data.

In this post, we share a brief Q&A with the authors of the paper, Alleviating Label Switching with Optimal Transport, presented at NeurIPS 2019.

What is your paper about?

Markov chain Monte Carlo (MCMC) is a prominent technique for posterior estimation in Bayesian inference, and it provides the accurate uncertainty estimates that are crucial for safety-critical applications. Unfortunately, for many important Bayesian models, posterior summarization (for example, computing the mean) is challenging due to label switching. This issue arises because the model is invariant under certain transformations, such as permuting the order of the mixture components. In this paper, we use machinery from optimal transport to propose a computationally efficient and theoretically justified solution to label switching.
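To make the invariance concrete, here is a minimal sketch of label switching in a two-component, one-dimensional Gaussian mixture. It is an illustration only, not code from the paper: the data, the helper `mixture_log_likelihood`, and the fixed standard deviation are all assumptions chosen for the example. Swapping the component order leaves the likelihood unchanged, yet averaging the two equally valid labelings coordinate by coordinate gives a mean that describes neither component.

```python
import math

def mixture_log_likelihood(data, weights, means, sigma=1.0):
    """Log-likelihood of 1-D data under a Gaussian mixture with shared std sigma.

    Hypothetical helper written for this illustration.
    """
    norm = sigma * math.sqrt(2.0 * math.pi)
    total = 0.0
    for x in data:
        density = sum(
            w * math.exp(-0.5 * ((x - m) / sigma) ** 2) / norm
            for w, m in zip(weights, means)
        )
        total += math.log(density)
    return total

# Toy data with one cluster near -2 and one near 3 (made up for the example).
data = [-2.1, -1.9, -2.0, 3.0, 3.2, 2.8]
weights = [0.5, 0.5]
means = [-2.0, 3.0]

# Relabeling the components (a permutation) does not change the likelihood.
ll_original = mixture_log_likelihood(data, weights, means)
ll_swapped = mixture_log_likelihood(data, weights[::-1], means[::-1])

# Two posterior samples that differ only by labeling.
samples = [[-2.0, 3.0], [3.0, -2.0]]

# A naive coordinate-wise average mixes the labels and collapses both means.
naive_mean = [sum(s[k] for s in samples) / len(samples) for k in range(2)]

print(ll_original, ll_swapped)
print(naive_mean)
```

A sampler that visits both labelings will therefore report the same value for every component mean, which is why the summary has to be computed in a way that ignores the ordering.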

What is new and significant about your paper?

Label switching is a long-standing open problem. Our work is the first to approach it using optimal transport, which provides an elegant lens through which we can analyze and formulate a resolution for the problem.

What will the impact be on the real world?

Our techniques can be readily implemented in modern probabilistic programming languages such as Stan. This will give practitioners an easier way to analyze important Bayesian models, such as mixture models, without the need for ad hoc fixes.

What would be the next steps?

Our methodology and theory are potentially applicable to other problems with intrinsic invariances. For example, it can help to learn molecular structures from cryogenic electron microscopy (cryo-EM).

What surprised you the most about your findings?

The efficiency of the algorithm, given how computationally intensive the Wasserstein barycenter problem appears to be.

What was the most interesting thing you learned?

The Wasserstein barycenter provides a meaningful estimate of the posterior mean of the mixture model and can be computed “on the fly.”
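As a rough illustration of what an "on the fly" estimate can look like, the sketch below keeps a running mean and aligns each incoming sample to it before averaging. This is a simplified stand-in under stated assumptions, not the algorithm from the paper: it assumes equal component weights, in which case optimal transport between two sets of component means reduces to finding the best one-to-one matching, and it finds that matching by brute force over permutations, which is only practical for a handful of components. The names `best_alignment` and `streaming_aligned_mean` and the synthetic samples are hypothetical.

```python
import random
from itertools import permutations

def best_alignment(sample, reference):
    """Reorder `sample` to minimize squared distance to `reference`.

    Brute-force search over all permutations; fine for small K only.
    """
    def cost(perm):
        return sum((sample[j] - reference[i]) ** 2 for i, j in enumerate(perm))
    best = min(permutations(range(len(sample))), key=cost)
    return [sample[j] for j in best]

def streaming_aligned_mean(samples):
    """Running mean in which each sample is aligned to the current estimate."""
    estimate = list(samples[0])
    for t, sample in enumerate(samples[1:], start=2):
        aligned = best_alignment(sample, estimate)
        # Incremental mean update with step size 1/t.
        estimate = [e + (a - e) / t for e, a in zip(estimate, aligned)]
    return estimate

# Synthetic "posterior samples": noisy component means in random label order.
rng = random.Random(0)
true_means = [-2.0, 0.5, 3.0]
samples = []
for _ in range(200):
    draw = [m + rng.gauss(0.0, 0.1) for m in true_means]
    rng.shuffle(draw)  # simulate label switching
    samples.append(draw)

# Sort only for display; the label order of the estimate is arbitrary.
estimate = sorted(streaming_aligned_mean(samples))
print(estimate)
```

Each sample is processed once and then discarded, so memory use does not grow with the number of samples. For more components, the brute-force search would need to be replaced by a proper assignment solver.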

How would you describe your paper in fewer than 5 words?

Quotient then optimize. No ambiguity.

Who would you like to thank?

In addition to support from IBM, Solomon’s group acknowledges the generous support of Army Research Office grant W911NF1710068, Air Force Office of Scientific Research award FA9550-19-1-031, National Science Foundation grant IIS-1838071, an Amazon Research Award, the MIT-IBM Watson AI Laboratory, the Toyota-CSAIL Joint Research Center, the QCRI–CSAIL Computer Science Research Program, and a gift from Adobe Systems.

Please cite our work using the BibTeX below.

@misc{monteiller2019alleviating,
  title={Alleviating Label Switching with Optimal Transport},
  author={Pierre Monteiller and Sebastian Claici and Edward Chien and Farzaneh Mirzazadeh and Justin Solomon and Mikhail Yurochkin},
  year={2019},
  eprint={1911.02053},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}