EvolveGCN: Evolving Graph Convolutional Networks for Dynamic Graphs

Authors

Authors

- Aldo Pareja

- Jie Chen

- Giacomo Domeniconi, Ph.D.

- Tengfei Ma, Ph.D.

- Toyotaro Suzumura, Ph.D.

- Hiroki Kanezashi

- Tim Kaler

- Tao B. Schardl, Ph.D.

- Charles E. Leiserson, Ph.D.

Edited By

Authors

- Aldo Pareja

- Jie Chen

- Giacomo Domeniconi, Ph.D.

- Tengfei Ma, Ph.D.

- Toyotaro Suzumura, Ph.D.

- Hiroki Kanezashi

- Tim Kaler

- Tao B. Schardl, Ph.D.

- Charles E. Leiserson, Ph.D.

Edited By

Published on

11/18/2019

Categories

Imagine trying to reason about something without any context. It’s possible, but your understanding will be limited and brittle. That’s because relationships between things give us critical information. What’s more, in complex systems, these relationships are always changing. In mathematics, we can model relational data as a graph or network structure — nodes, edges, and the attributes associated with each. But to date, deep learning on graph structured data has lagged, especially on dynamic graphs. In our paper, EvolveGCN: Evolving Graph Convolutional Networks for Dynamic Graphs, published in AAAI 2020, we propose a new method for building graph deep learning models for handling evolutionary dynamism over time.

Toward dynamic graph deep learning

While deep learning has done remarkable things on Euclidean data (e.g. audio, images, video) graph deep learning has lagged because combinatorial complexity and nonlinearity issues making training very difficult and expensive. Yet it’s precisely the information hidden in that complexity that makes graphs so interesting. That has begun to change in recent years with scalability advancements in graph deep learning as Graph Convolutional Networks (Kipf & Welling) and FastGCN (Chen, Ma, & Xiao).

Graph neural networks (GNN) have thus far focused on static graphs. Real-life applications, however, often involve dynamically evolving graphs. For example, a bank conducting anti-money laundering programs must continually analyze millions or even billions of transactions per day. Were that bank to use graph learning, the vectorial representations of the users would have to be updated accordingly to reflect the temporal evolution of their network relationships. This urges the development of dynamic graph methods that encode the temporal evolution of relational data.

As we strive for a shift from “narrow AI” systems to “broad AI” ones with more robust capabilities, this ability to handle dynamism in complex systems emerges as a key theme.

Introducing EvolveGCN

In our paper, EvolveGCN: Evolving Graph Convolutional Networks for Dynamic Graphs, published in AAAI 2020, we propose EvolveGCN, which adapts the graph convolutional network (GCN) model along the temporal dimension without resorting to node embeddings.

Combining Graph Neural Network (GNN) with Recurrent Neural Network (RNN) is a natural idea. Typical approaches use the GNN as a feature extractor and use an RNN to learn the dynamics from the extracted node features. We instead use the RNN to evolve the GNN, so that the dynamism is captured in the evolving network parameters. One advantage is that it handles more flexibly dynamic data, because a node does not need to be present all time around.

In EvolveGCN, we update the GCN parameters instead of post-updating the GCN embeddings. That makes the model flexible to adapt to different sizes of graphs across the time.

Figure 1: Schematic illustration of EvolveGCN. The RNN means a recurrent architecture in general (e.g., GRU, LSTM). We suggest two options to evolve the GCN weights, treating them with different roles in the RNN

Weight Evolution

At the heart of the EvolveGCN is the update of the weight matrix at each time step. We provide two options for performing this task.

The first option is to treat the weight matrix as the hidden state of the dynamical system. We use a gated recurrent unit (GRU) to update the hidden state. We call this approach EvolveGCN-H and it is illustrated as follows:

EvolveGCN-H

EvolveGCN-H

The second option is to treat the weight matrix as the output of the dynamical system. This then becomes the input at the subsequent time step. We use a long short-term memory (LSTM) cell to model this input-output relationship. The LSTM itself maintains the system information by using a cell context, which acts like the hidden state of a GRU. In this version, node embeddings are not used at all. We call this EvolveGCN-O and it is illustrated follows:

EvolveGCN-O

EvolveGCN-O

EvolveGCN experiments on dynamic graphs

We used seven dynamic graph datasets for three types of tasks:

- Link prediction: (SBM, BC-OTC, BC-Alpha, UCI, AS). The task of link prediction is to leverage information up to time *t* and predict the existence of an edge *(u, v)* at time *t + 1*. At least one version of EvolveGCN achieves the best result for each of the data sets SBM, UCI, and AS. For BC-OTC and BC- Alpha, EvolveGCN also outperforms the two GCN related baselines, but it is inferior to DynGEM and dyngraph2vec. These latter methods differ from others in that node embeddings are obtained in an unsupervised manner. It is surprising that unsupervised approaches are particularly good on certain data sets, given that the link prediction model is trained separately from graph autoencoding. In such a case, graph convolution does not seem to be sufficiently powerful in capturing the intrinsic similarity of the nodes, rendering a much inferior starting point for dynamic models to catch up. Although EvolveGCN improves over GCN substantially, it still does not reach the bar set by graph autoencoding.

Evolve GCN link prediction

- Edge classification: (BC-OTC, BC-Alpha, and Reddit). Predicting the label of an edge *(u, v)* at time *t* is done in almost the same manner as link prediction. The F1 scores across different methods are compared below. In all cases, the two EvolveGCN versions outperform GCN and GCN-GRU. Moreover, similar observations are made for the precision and the recall, which are omitted due to space limitation. These appealing results corroborate the effectiveness of the proposed method.

EvolveGCN edge classification

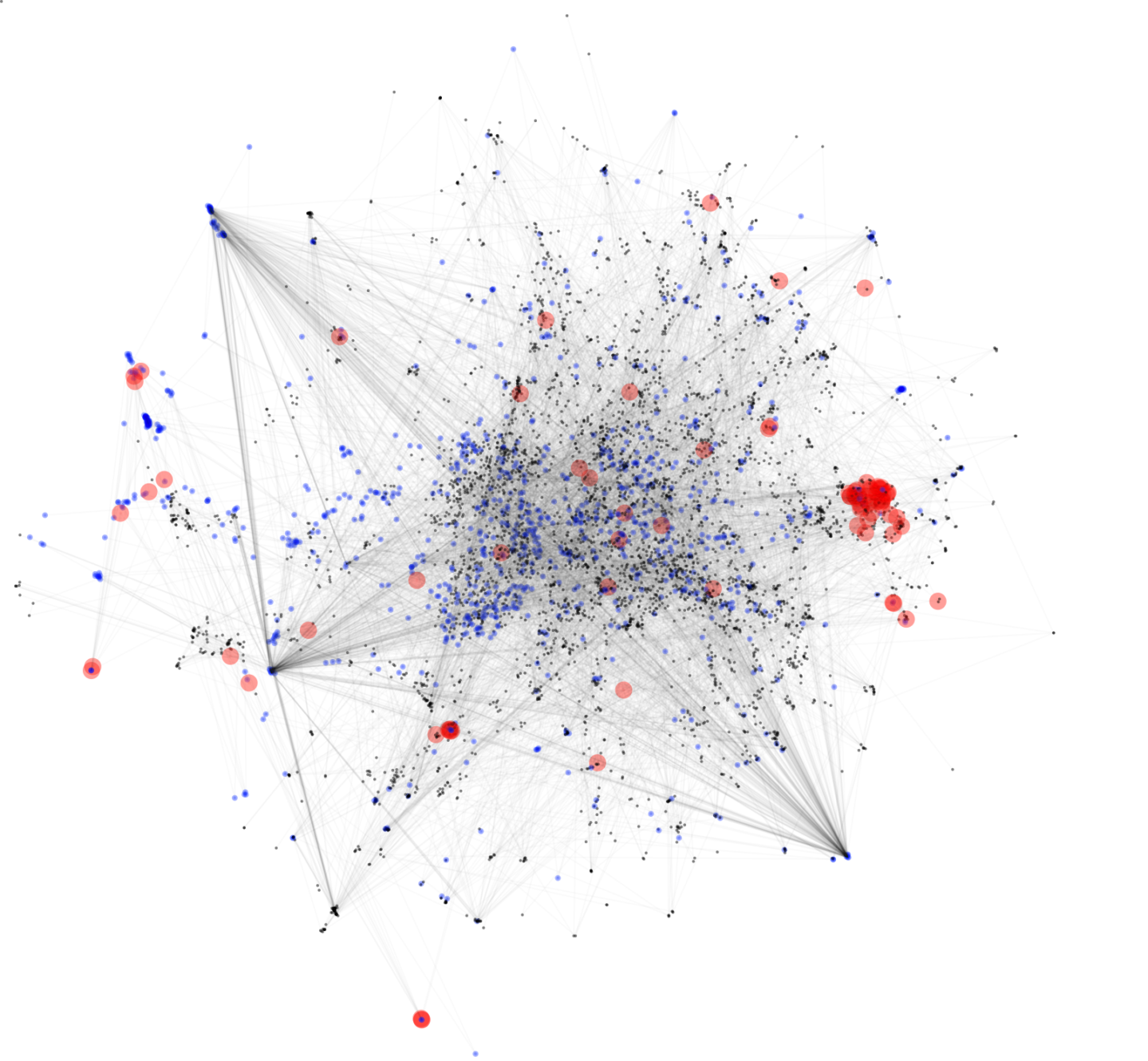

- Node classification: (Elliptic). Predicting the label of a node u at time t follows the same practice of a standard GCN: the activation function of the last graph convolution layer is the softmax function. Publicly available data sets for node classification in the dynamic setting are rare. We use only one data set (Elliptic) for demonstration. This data set is the largest one in node count in Table 1. The F1 scores for the data set Elliptic are plotted also in Figure 3. In this data set, the classes correspond to licit and illicit transactions respectively and they are highly skewed. For financial crime forensic, the illicit class (minority) is the main interest. Hence, we plot the minority F1. The micro averages are all higher than 0.95 and not as informative. One sees that EvolveGCN-O performs better than the static GCN, but not so much as GCN-GRU. Indeed, dynamic models are more effective. For an interesting phenomenon, we plot the history of the F1 scores along time in Figure 4. All methods perform poorly starting at step 43. This time is when the dark market shutdown in Bitcoin occurred. Such an emerging event causes performance degrade for all methods, with non-dynamic models suffering the most. Even dynamic models are not able to perform reliably, because the emerging event has not been learned.

EvolveGCN node classification

Summary

Graphs are ubiquitous in the world. In practical scenarios, we are often faced with graphs that are constantly evolving, rather than being conveniently static for a once-for-all investigation. EvolveGCN provides a new aspect to evolve the graph neural network along such temporal axes.

Please cite our work using the BibTeX below.

@article{pareja2020evolvegcn,

title={Evolvegcn: Evolving graph convolutional networks for dynamic graphs},

author={Pareja, Aldo and Domeniconi, Giacomo and Chen, Jie and Ma, Tengfei and Suzumura, Toyotaro and Kanezashi, Hiroki and Kaler, Tim and Leisersen, Charles E},

journal={AAAI},

year={2020}

}