Prasanna Sattigeri

Research Staff Member

New tricks from old dogs: multi-source transfer learning

Authors

Authors

Edited by

Authors

Edited by

Published on

12/10/2019

In our paper, Learning New Tricks From Old Dogs: Multi-Source Transfer Learning From Pre-Trained Networks, presented in NeurIPS 2019, we contribute a new method for fusing the features learned by existing neural networks to produce efficient, interpretable predictions for novel target tasks. We call this method maximal correlation weighting (MCW) because it leverages the principal of maximal correlations and conditional expectation operators to build a classifier from an appropriate weighting of the feature functions from the source networks. This is especially useful in cases where the original training data for these nets are lost and the nets themselves cannot be modified.

It’s the holiday season and you’re seeking some personal-professional life wisdom from family. Your mom’s two siblings couldn’t be less alike. Your aunt hitchhiked to California when she was 18, lived in a commune, never married and now runs an artist asylum. Her brother stayed in Ohio to run the small family auto shop, raise a few kids, and watch football on weekends. They are both smart and sharply opinionated. Who do you go to for advice, or are both of them too different from you to even learn from?

If you only listened to one of them, you’ll get a pretty narrow view of the world. If you disregard them you’ll miss out on the unique seeds of wisdom each of them has. The key is to take both, each with a grain of salt, integrate them with your own thoughts into collective wisdom for your own life journey.

This is multi-source transfer learning: applying the knowledge gained from multiple domain sources. Pre-trained neural networks are everywhere these days, but each tends to have a very narrow view of the world, not unlike your aunt or uncle. Taken individually, these “old dog” networks are often quite brittle and unhelpful. Taken together, though, they can teach us quite a lot.

Today, most transfer learning methods require some kind of control over the systems learned, either by enforcing constraints during the source training, or through the use of a joint optimization objective between tasks that requires all data be co-located for training. However, in a variety of settings, such as where privacy is a factor, we may have no control over the individual source task training, nor access to source training samples. We only have access to features pre-trained on such data as the output of “black-boxes.”

In our paper, propose a new method called maximal correlation weighting (MCW) for fusing the features learned by existing neural networks to produce efficient, interpretable predictions for novel target tasks. MCW leverages the principal of maximal correlations and conditional expectation operators to build a classifier from an appropriate weighting of the feature functions from the source networks. This is especially useful in cases where the original training data for these nets are lost and the nets themselves cannot be modified.

For example: consider the multi-source learning problem of training a classifier using an ensemble of pre-trained neural networks for a set of classes that have not been observed by any of the source networks, and for which we have very few training samples. By using these distributed networks as feature extractors, we can train an effective classifier in a computationally-efficient manner using MCW. See the paper for results the image benchmark datasets CIFAR-100, Stanford Dogs, and Tiny ImageNet, formulated as cases where each pre-trained network is trained on a different initial task than the final target task of interest. We also use the methodology to characterize the relative value of different source tasks in learning a target task.

How it works

Speaking of old dogs, our methodology is based on the use of maximal correlation analysis, which originated with the work of Hirschfeld in … 1935!

It has been further developed in a wide range of subsequent work , including by Gebelein and Rényi, and as a result is sometimes referred to as Hirschfeld-Gebelein-Renyi (HGR) maximal correlation analysis. We provide more discussion on this in a separate paper called On Universal Features for High-Dimensional Learning and Inference.

This foundation allows us to formulate a predictor for the target

labels:

![]()

![Rendered by QuickLaTeX.com \[\Ph_{Y|X}(y|x) = \Ph^t_Y(y) \left(1+\sum_{n,i}\sigma_{n,i}f^{s_n}_i(x)g^{s_n}_i(y)\right),\]](https://mitibmwatsonailab.mit.edu/wp-content/ql-cache/quicklatex.com-d1b2d95316046d4d15ea1d55833741b1_l3.png)

from which are classification ![]() for a given test sample

for a given test sample ![]() is

is

![Rendered by QuickLaTeX.com \[\yh = \argmax_{y} \Ph_{Y|X}(y|x) = \argmax_{y} \Ph^t_Y(y)\left(1+ \sum_{n,i}\sigma_{n,i}f^{s_n}_i(x)g^{s_n}_i(y)\right),\]](https://mitibmwatsonailab.mit.edu/wp-content/ql-cache/quicklatex.com-697cd4c3fb7cb0139abdd4422326b5e7_l3.png)

where ![]() is an estimate of the target label distribution.

is an estimate of the target label distribution.

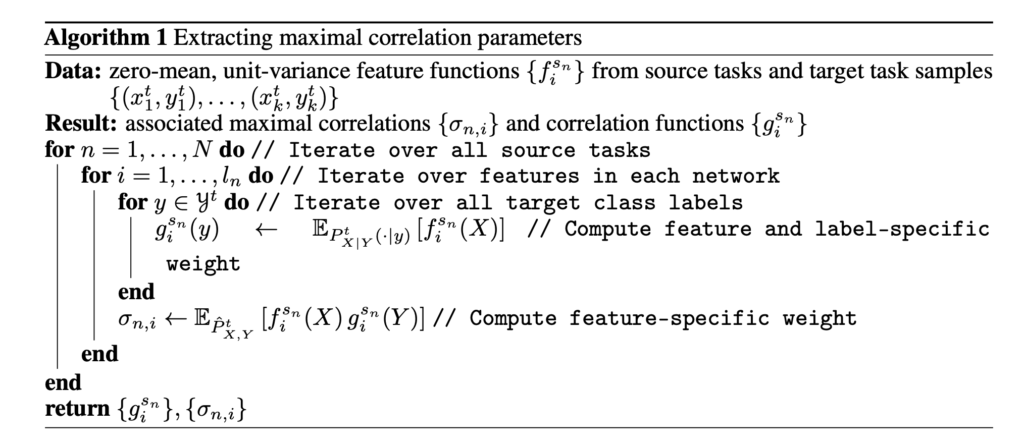

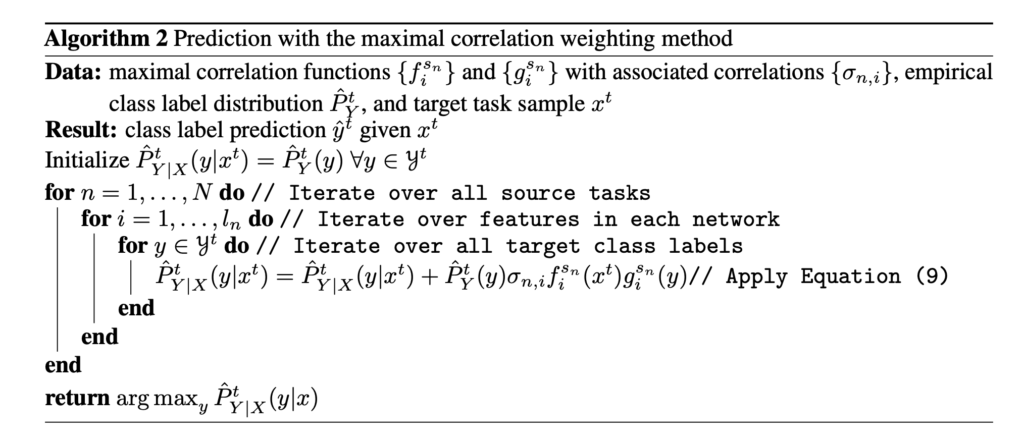

Then we proceed to construct the following two algorithms for learning the MCW parameters (Algorithm 1) and for computing the MCW predictions (Algorithm 2).

Note that computing the empirical conditional expected value requires a single pass through the data, and so has linear time complexity in the number of target samples. We also need to compute one conditional expectation for each feature function.

Key features and nice surprises of Maximal Correlation Weighting

Three key features make MCW valuable here. First, it’s very lightweight, requiring only a single pass through the data to compute weights. Second, MCW uses decoupled weights, allowing for the aggregate predictor to achieve higher accuracy in the few-shot regime. Third, MCW provides a “maximal correlation score,” which indicates how important different features/source networks are in influencing the final prediction. This can be used in the source selection problem to determine which source networks are vital in making good predictions and which are more superfluous with only a few target task samples.

One nice surprise in this work is that MCW proves to be more effective over more complex neural nets which required more complex optimization techniques to train, as compared to our method which only performs simple, closed-form computations to exactly optimize our objective.

Though it seems obvious in hindsight, it is fascinating to see the difference in effect that networks with different maximal correlation scores had on the quality of the final predictor. While removing networks with low scores from the ensembles would have little to no effect, removing those with high scores would result in significant drops in accuracy for target classification.

Reflection

In addition the presenting a new method here, we reflect on two important lessons from this work.

First, “old dogs” have a lot to offer us in wisdom that, once properly fused, can be applied to new tasks.

Second, simple theory sometimes beats overly complicated architectures. In this case, by leveraging Hirschfeld-Gebelein-Rényi maximal correlation, we were able to develop a fast, easily-computed method for combining the features extracted by “old dog” neural networks to build a classifier for new target tasks. This success across multiple datasets serves as a helpful reminder to stay mindful of first principles and the importance of theory in experimental work.

In other words, don’t be afraid to pick up a book from 1935 now and again.

Please cite our work using the BibTeX below.

@incollection{NIPS2019_8688,

title = {Learning New Tricks From Old Dogs: Multi-Source Transfer Learning From Pre-Trained Networks},

author = {Lee, Joshua and Sattigeri, Prasanna and Wornell, Gregory},

booktitle = {Advances in Neural Information Processing Systems 32},

editor = {H. Wallach and H. Larochelle and A. Beygelzimer and F. d\textquotesingle Alch\'{e}-Buc and E. Fox and R. Garnett},

pages = {4372--4382},

year = {2019},

publisher = {Curran Associates, Inc.},

url = {http://papers.nips.cc/paper/8688-learning-new-tricks-from-old-dogs-multi-source-transfer-learning-from-pre-trained-networks.pdf}

}Photo Credit

Authors