Using geometry to understand documents

Authors

- Mikhail Yurochkin

- Zhiwei Fan

- Aritra Guha

- Paraschos Koutris

- XuanLong Nguyen

Published on

12/10/2019

In this post, we share a brief Q&A with the authors of the paper "Scalable inference of topic evolution via models for latent geometric structures," presented at NeurIPS 2019.

Scalable inference of topic evolution via models for latent geometric structures

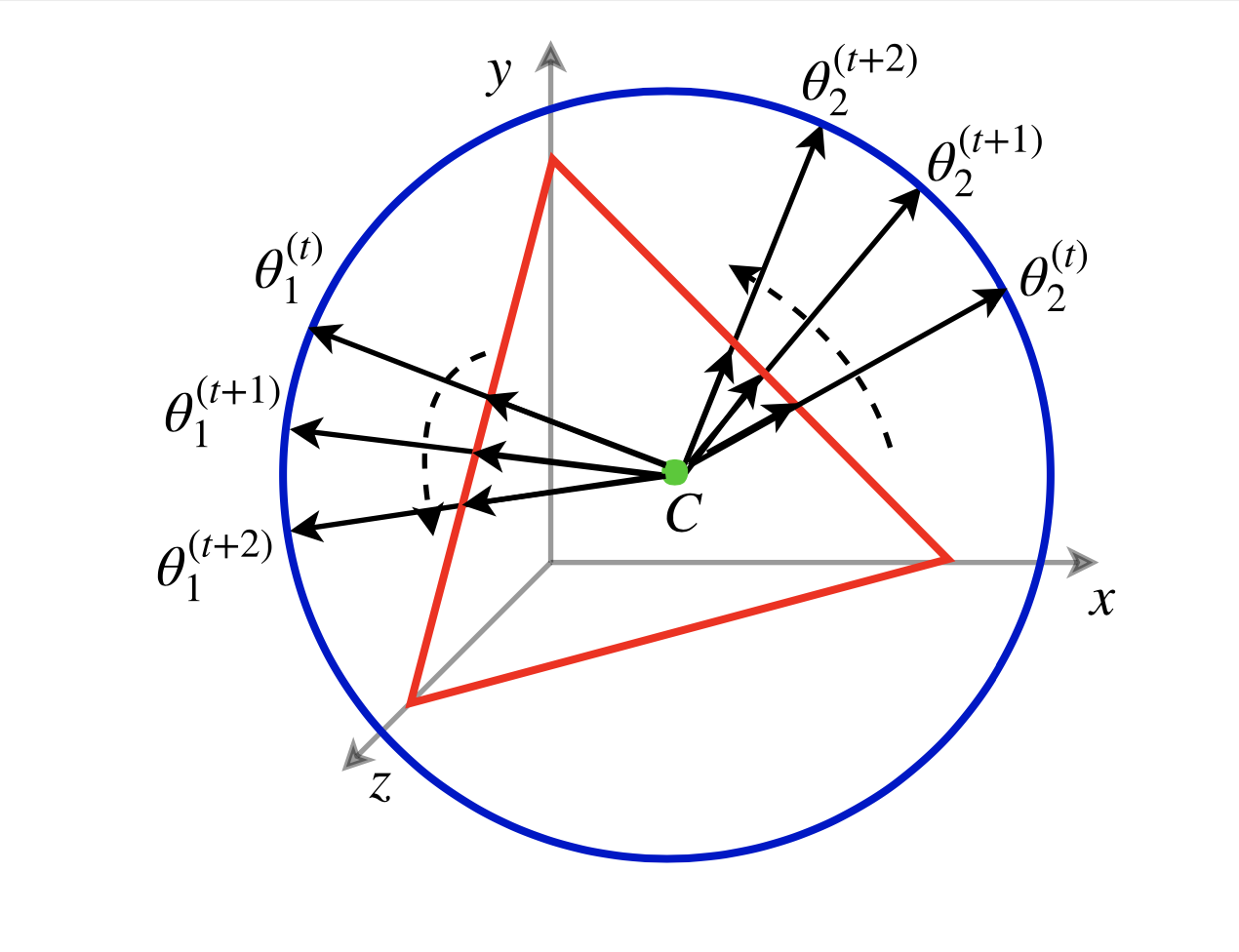

Abstract: We develop new models and algorithms for learning the temporal dynamics of the topic polytopes and related geometric objects that arise in topic model based inference. Our model is nonparametric Bayesian and the corresponding inference algorithm is able to discover new topics as the time progresses. By exploiting the connection between the modeling of topic polytope evolution, Beta-Bernoulli process and the Hungarian matching algorithm, our method is shown to be several orders of magnitude faster than existing topic modeling approaches, as demonstrated by experiments working with several million documents in under two dozens of minutes.

What is your paper about?

We consider the problem of fusing topic models learned from data that is partitioned into groups, over time, or both. For example, timestamped news articles can each be attributed to a specific geographic area. Each partition of the articles can be processed in parallel using standard topic modeling techniques, and the resulting models can then be fused into a nonparametric dynamic topic model using our approach. Our models allow topics to appear or become dormant as time progresses.

What is new and significant about your paper?

We propose three novel Bayesian nonparametric models for the fusion of topic models. Our inference procedure is very fast and, together with the fusion perspective and geometric topic inference, leads to state-of-the-art run times. We demonstrate nonparametric, dynamic topic modeling of about 3 million documents in 20 minutes. As part of our techniques, we also propose a new geometric formulation of topic models by embedding them into a sphere.
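As a rough illustration of what placing topics on a sphere can look like, here is a minimal Python sketch. It rests on assumptions that are not taken from the paper: topics are plain word-probability vectors, the reference point defaults to their mean, and the function name `embed_on_sphere` is hypothetical. The paper's actual construction may differ.

```python
import math


def embed_on_sphere(topics, center=None):
    """Map each topic to a unit vector pointing from `center` to the topic.

    Assumption: `center` defaults to the mean of the topics. This choice is
    illustrative only and is not taken from the paper.
    """
    dim = len(topics[0])
    if center is None:
        center = [sum(t[d] for t in topics) / len(topics) for d in range(dim)]
    embedded = []
    for topic in topics:
        # Direction of the topic relative to the reference point.
        diff = [x - c for x, c in zip(topic, center)]
        norm = math.sqrt(sum(x * x for x in diff))
        if norm == 0.0:
            raise ValueError("a topic coincides with the reference point")
        # Rescale to unit length so the point lies on the unit sphere.
        embedded.append([x / norm for x in diff])
    return embedded


# Three toy topics over a three-word vocabulary (made-up numbers).
topics = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8], [0.1, 0.8, 0.1]]
points = embed_on_sphere(topics)
```

Once topics are unit vectors, the similarity of two topics reduces to the angle between them, which is what makes a spherical representation convenient for comparing and matching topics.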

What will the impact be on the real world?

Modern text datasets are large and often have a natural grouping structure (by geography, by publisher, etc.). It is crucial for topic models to scale and to account for data heterogeneity. Our approach provides a practical solution.

What would be the next steps?

Our algorithms enable fusion of other machine learning techniques and are suitable for emerging federated learning applications. In concurrent work, we have already extended our Distributed Matching approach to federated learning of neural networks, Gaussian processes, mixture models, and hidden Markov models. An interesting direction for future work is to extend our dynamic approaches to fuse other models learned from data partitioned in time. Another direction is to develop fusion of posterior distributions using optimal transport.

What surprised you the most about your findings?

Bayesian nonparametrics allows for flexible models, but it often results in computationally demanding inference. In this paper, by contrast, we used Bayesian nonparametrics to enable very fast inference.

What was the most interesting thing you learned?

We found an intriguing connection between modeling with the Indian Buffet Process and inference using the Hungarian algorithm.
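To make the role of the Hungarian algorithm concrete, here is a minimal Python sketch that matches one group's local topics to a set of global topics. It is an illustration under stated assumptions, not the paper's implementation: the squared Euclidean cost, the constant `new_topic_cost` penalty, and the function names `hungarian` and `match_topics` are all hypothetical. In the paper the matching is tied to a Beta-Bernoulli process model; here a fixed penalty simply stands in for the cost of opening a new topic.

```python
def hungarian(cost):
    """Solve the assignment problem with the Hungarian algorithm.

    `cost` is a list of rows with no more rows than columns. Returns a list
    whose i-th entry is the column assigned to row i, minimizing total cost.
    """
    n, m = len(cost), len(cost[0])
    inf = float("inf")
    u = [0.0] * (n + 1)  # row potentials
    v = [0.0] * (m + 1)  # column potentials
    p = [0] * (m + 1)    # p[j]: 1-based row matched to column j, 0 if free
    way = [0] * (m + 1)  # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        # Flip the matching along the augmenting path that was found.
        while j0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    return assignment


def match_topics(local_topics, global_topics, new_topic_cost):
    """Match each local topic to a global topic, or to None for a new topic.

    Assumption: squared Euclidean distance as the matching cost, plus one
    extra "new topic" column per local topic at a fixed cost.
    """
    n = len(local_topics)
    cost = []
    for t in local_topics:
        row = [sum((a - b) ** 2 for a, b in zip(t, g)) for g in global_topics]
        row += [new_topic_cost] * n  # slots that represent opening a new topic
        cost.append(row)
    cols = hungarian(cost)
    return [c if c < len(global_topics) else None for c in cols]


# Toy example with made-up topics over a three-word vocabulary.
global_topics = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
local_topics = [[0.1, 0.2, 0.7], [0.6, 0.3, 0.1], [0.1, 0.8, 0.1]]
print(match_topics(local_topics, global_topics, new_topic_cost=0.2))
# → [1, 0, None]
```

The first two local topics are matched to existing global topics, while the third is far from both and is cheaper to treat as new. Padding the cost matrix with "new topic" columns is one simple way to let the number of topics grow while keeping the problem a standard assignment problem.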

How do you see this research evolving in the next 5 years?

Our paper emphasizes the strengths of the fusion perspective for learning from diverse datasets and of geometric topic modeling. We believe these research directions will lead to exciting new results in the future.

Who would you like to thank?

I (Zhiwei Fan) would like to thank all my co-authors. I have learned a lot from them during the project. I would like to especially thank Mikhail, who has given me a lot of insight, making it possible to integrate ideas from different research areas (my primary research areas are database management and big data analytics).

Please cite our work using the BibTeX below.

@incollection{NIPS2019_8438,
  title = {Hierarchical Optimal Transport for Document Representation},
  author = {Yurochkin, Mikhail and Claici, Sebastian and Chien, Edward and Mirzazadeh, Farzaneh and Solomon, Justin M},
  booktitle = {Advances in Neural Information Processing Systems 32},
  editor = {H. Wallach and H. Larochelle and A. Beygelzimer and F. d\textquotesingle Alch\'{e}-Buc and E. Fox and R. Garnett},
  pages = {1601--1611},
  year = {2019},
  publisher = {Curran Associates, Inc.},
  url = {http://papers.nips.cc/paper/8438-hierarchical-optimal-transport-for-document-representation.pdf}
}