Sign-OPT: Defending the hard-label black-box cyber attack

Authors

Authors

- Minhao Cheng

- Simranjit Singh

- Pin-Yu Chen

- Sijia Liu

- Cho-Jui Hsieh

Edited by

Authors

- Minhao Cheng

- Simranjit Singh

- Pin-Yu Chen

- Sijia Liu

- Cho-Jui Hsieh

Edited by

Published on

09/24/2019

Categories

Cybersecurity is one of the greatest obstacles to safely introducing AI systems in industry, government, and society. Adversarial machine learning is the subfield of AI focused on stress-testing AI models by attacking them. In our paper, Sign-OPT: A Query-Efficient Hard-label Adversarial Attack, published in ICLR 2020, we consider the most challenging and practical attack setting: the hard-label black-box attack. This is where the model is hidden to the attacker and the attacker can only make queries to get the corresponding hard-label decisions (e.g., predicted labels) of the model. In this work, we propose a novel optimization algorithm called Sign-OPT for executing such an attack. By sharing it here with the community, we can all see how to identify and guard against such attacks.

The hard-label black-box cyber attack

In the “hard-label black-box attack” setting for generating adversarial machine learning examples of cyber attacks, limited model queries are allowed and only the decision is provided to a queried data input. This is considered to be the most difficult and also the most practical setting based on general real-world assumptions. Several algorithms have been proposed for this problem but they typically require huge amounts (>20,000) of queries for attacking a single example. Among them, the state-of-the-art approach (Opt-attack) showed that hard-label attack can be modeled as an optimization problem where the objective function can be evaluated by binary search with additional model queries, thereby a zeroth order optimization algorithm can be applied.

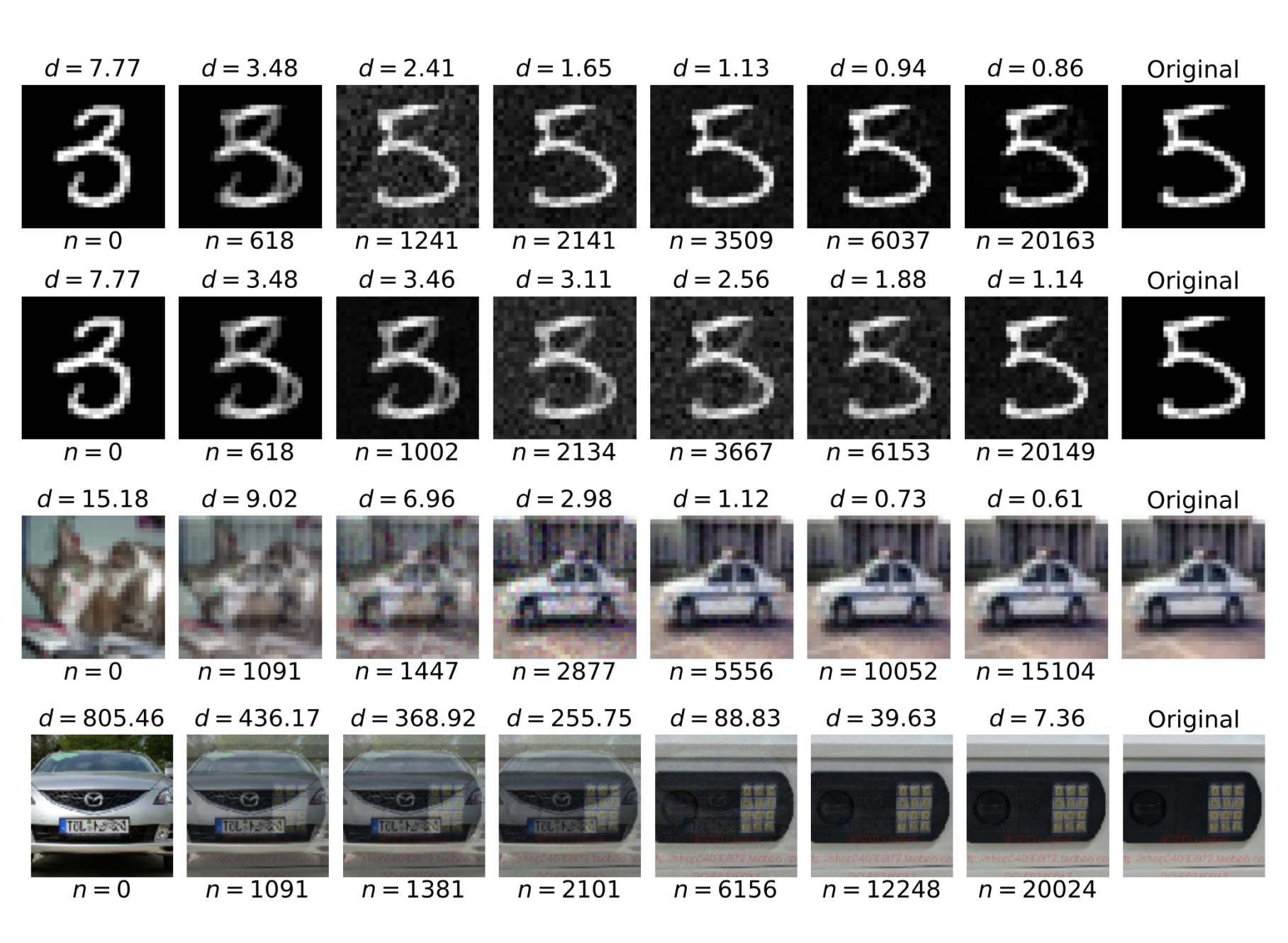

In this paper, we adopt the same optimization formulation but propose to directly estimate the sign of the gradient at any direction instead of the gradient itself. Using this approach, we develop a query-efficient algorithm called Sign-OPT for the hard-label black-box attack. We provide a convergence analysis of the new algorithm and conduct experiments on several models on MNIST, CIFAR-10 and ImageNet. We find that a Sign-OPT attack consistently requires 5X to 10X fewer queries when compared to the current state-of-the-art approaches, and usually converges to an adversarial example (i.e. an instance where the model is effectively compromised) with smaller perturbations.

Alternative adversarial machine learning attacks

A commonly used algorithm proposed in the hard-label black-box attack setting is called Boundary attack (Brendel et al., 2017). It is based on random walks on the decision surface, but it does not have any convergence guarantee. More recently, Cheng et al. (2019) showed that finding the minimum adversarial perturbation in the hard-label setting can be reformulated as another optimization problem. However, instead of using finite differences to estimate the magnitude of directional derivative, we propose to evaluate its sign using only a single query. With this single-query sign oracle and the corresponding algorithm, we can theoretically prove and empirically demonstrate a significant reduction in the number of queries required for hard-label black box attack. Moreover, we note that Liu et al. (2019) designed a Zeroth Order SignSGD algorithm for soft-label black box attack (not hard-label setting). They demonstrated a comparable or even better convergence rate than zeroth order stochastic gradient descent by using only sign information of gradient estimation. Although it is possible to combine ZO-SignSGD with our proposed single query oracle for solving hard-label attack, their estimator will take the sign of the whole vector and thus ignore the direction of perturbation vector, which leads to slower convergence in practice.

The Sign-OPT attack

Sign-OPT is novel in that it can be viewed as a new zeroth order optimization algorithm that features fast convergence of signSGD. Instead of directly taking the sign of gradient estimation, it algorithm utilizes the scale of random direction. This makes existing analysis inappropriate to our case, so we provide a new recipe to prove the convergence of this new optimizer.

By sampling random Gaussian vector ![]() times, we can estimate the imperfect gradient by

times, we can estimate the imperfect gradient by

(1)

which only requires ![]() queries.

queries.

We then use this imperfect gradient estimate to update our search direction ![]() as

as ![]() with a step size

with a step size ![]() and use the same search procedure to compute

and use the same search procedure to compute ![]() up to a certain accuracy. The detailed procedure is shown in the Sign-OPT algorithm, defined as follows:.

up to a certain accuracy. The detailed procedure is shown in the Sign-OPT algorithm, defined as follows:.

- Hard-label model

, original image

, original image  , initial

, initial

-

At iteration

, randomly sample

, randomly sample  from a Gaussian distribution

from a Gaussian distribution - Compute

- Update

- Evaluate

using the query-efficient search algorithm proposed by Cheng, et. al.

using the query-efficient search algorithm proposed by Cheng, et. al.

Experiments

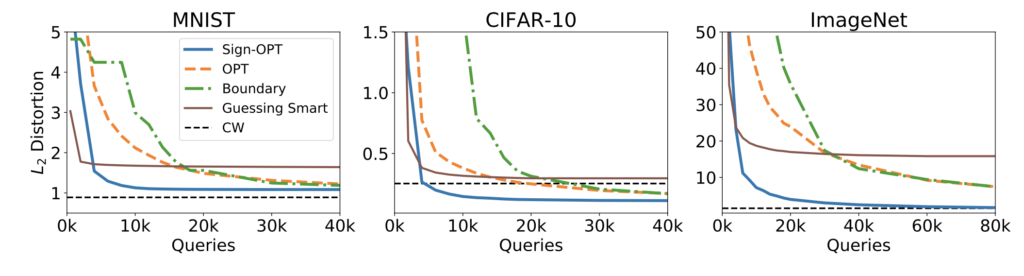

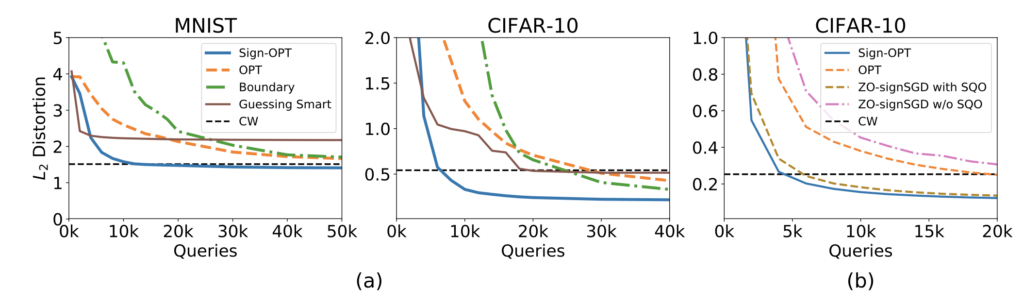

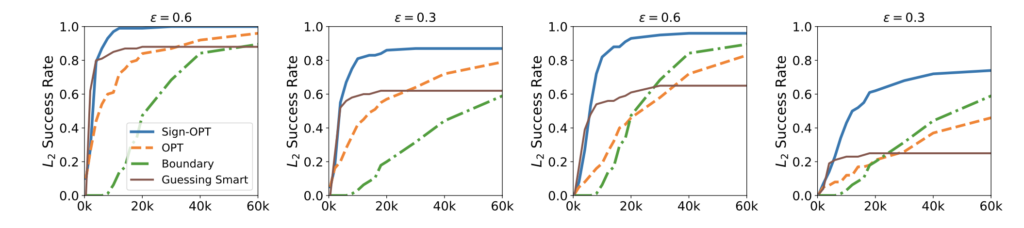

In our experiments, we provide a convergence analysis of the Sign-OPT algorithm for several models on MNIST, CIFAR-10 and ImageNet. We show that the proposed algorithm consistently reduces the query count by 5–10 times across different models and datasets. This suggests Sign-OPT is a practical and query-efficient robustness evaluation tool. Furthermore, on most datasets our algorithm can find an adversarial example with smaller distortion compared with previous approaches.

Figure 4: Untargeted attack: Median distortion vs Queries for different datasets.

Figure 5: (a) Targeted Attack: Median distortion vs Queries of different attacks on MNIST and CIFAR-10. (b) Comparing Sign-OPT and ZO-SignSGD with and without single query oracle (SQO).

Figure 6: Success Rate vs Queries for CIFAR-10 (L2 norm-based attack). First two and last two depict untargeted and targeted attacks respectively. Success rate threshold is at the top of each plot.

Summary

We generally speak of Efficient AI in positive terms of making AI more practical because deep learning systems are so expensive and energy-intensive to deploy. In cybersecurity and adversarial machine learning, we see the double-edged sword: the more efficient an attack, the more frequently and pervasively it can be executed. The Sign-OPT algorithm, as single-query sign oracle, provides a new benchmark on design of a highly efficient hard-label black box attack. We urge our fellow researchers and cybersecurity professionals to review this attack and build safeguards against it when developing deep learning systems.

Hard-label black-box attack: an adversarial attack designed for a black-box neural network using only binary decisions rather than the probability for class-wise classification.

Please cite our work using the BibTeX below.

@inproceedings{

Cheng2020Sign-OPT:,

title={Sign-OPT: A Query-Efficient Hard-label Adversarial Attack},

author={Minhao Cheng and Simranjit Singh and Patrick H. Chen and Pin-Yu Chen and Sijia Liu and Cho-Jui Hsieh},

booktitle={International Conference on Learning Representations},

year={2020},

url={https://openreview.net/forum?id=SklTQCNtvS}

}