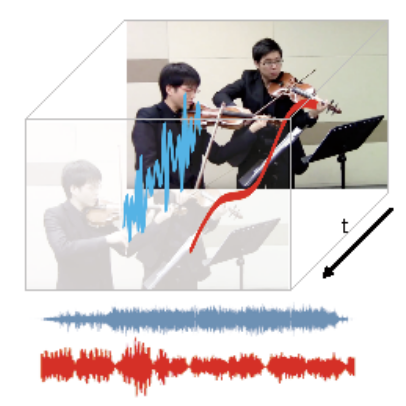

Sounds originate from object motions and vibrations of surrounding air. Inspired by the fact that humans are capable of interpreting sound sources from how objects move visually, we propose a novel system that explicitly captures such motion cues for the task of sound localization and separation. Our system is composed of an end-to-end learnable model called Deep Dense Trajectory (DDT) and a curriculum learning scheme. It exploits the inherent coherence of audio-visual signals from large quantities of unlabeled videos. Quantitative and qualitative evaluations show that, compared to previous models that rely on visual appearance cues, our motion-based system improves performance in separating musical instrument sounds. Furthermore, it separates sound components from duets of the same category of instruments, a challenging problem that has not been addressed before.

Please cite our work using the BibTeX below.

@article{DBLP:journals/corr/abs-1904-05979,
  author        = {Hang Zhao and Chuang Gan and Wei{-}Chiu Ma and Antonio Torralba},
  title         = {The Sound of Motions},
  journal       = {CoRR},
  volume        = {abs/1904.05979},
  year          = {2019},
  url           = {http://arxiv.org/abs/1904.05979},
  archivePrefix = {arXiv},
  eprint        = {1904.05979},
  timestamp     = {Thu, 25 Apr 2019 13:55:01 +0200},
  biburl        = {https://dblp.org/rec/journals/corr/abs-1904-05979.bib},
  bibsource     = {dblp computer science bibliography, https://dblp.org}
}